Code reviews take time, and much of that time goes to pointing out small things like missing semicolons, inconsistent formatting, and forgotten edge cases instead of focusing on real problems in the code.

For most of us, this can be frustrating and makes the whole process feel less productive. That’s why more developers are turning to AI code review tools.

These tools handle the boring and repetitive checks, so you can spend more time on logic, structure, and writing better code. They help you catch bugs early, stick to your coding standards, and move faster without losing quality.

If you’ve already started using AI to automate work tasks or save time with smart tools, then bringing AI into your code review process is just the next smart move.

Let’s break down how it works and how to get the most out of it.

What Is AI Code Review and How Does It Work

AI code review is exactly what it sounds like. It's when you use artificial intelligence to help check your code for problems before it goes live. Instead of doing everything by hand, you let smart tools scan your code and flag issues automatically.

These tools look for things like bugs, performance problems, security risks, or even code that just doesn't follow your team's style. Some can even suggest changes or explain why something needs to be fixed.

Most AI code reviewers connect directly to your existing tools like GitHub or GitLab. Some work inside your IDE, while others run in your CI pipeline after you push a commit. That way, you get instant feedback without needing to wait for a teammate to jump in.

Itamar Friedman, co-founder of Qodo, puts it this way: "AI is especially useful for pull requests in the code review process, particularly for longer PRs that human reviewers find overwhelming."

Think of it as an extra pair of eyes that never gets tired and helps you stay consistent across the board.

Key Benefits of AI Code Review Tools

AI code review tools offer clear advantages that cut through the pain of manual reviews:

- Faster feedback and fewer bugs

Development data shows engineers spend about half their time debugging, yet traditional review tools catch only around 10% of critical bugs. AI steps in early in the process, surfacing issues before they become costly down the line. That means less unplanned work and more reliable delivery.

- More consistent quality

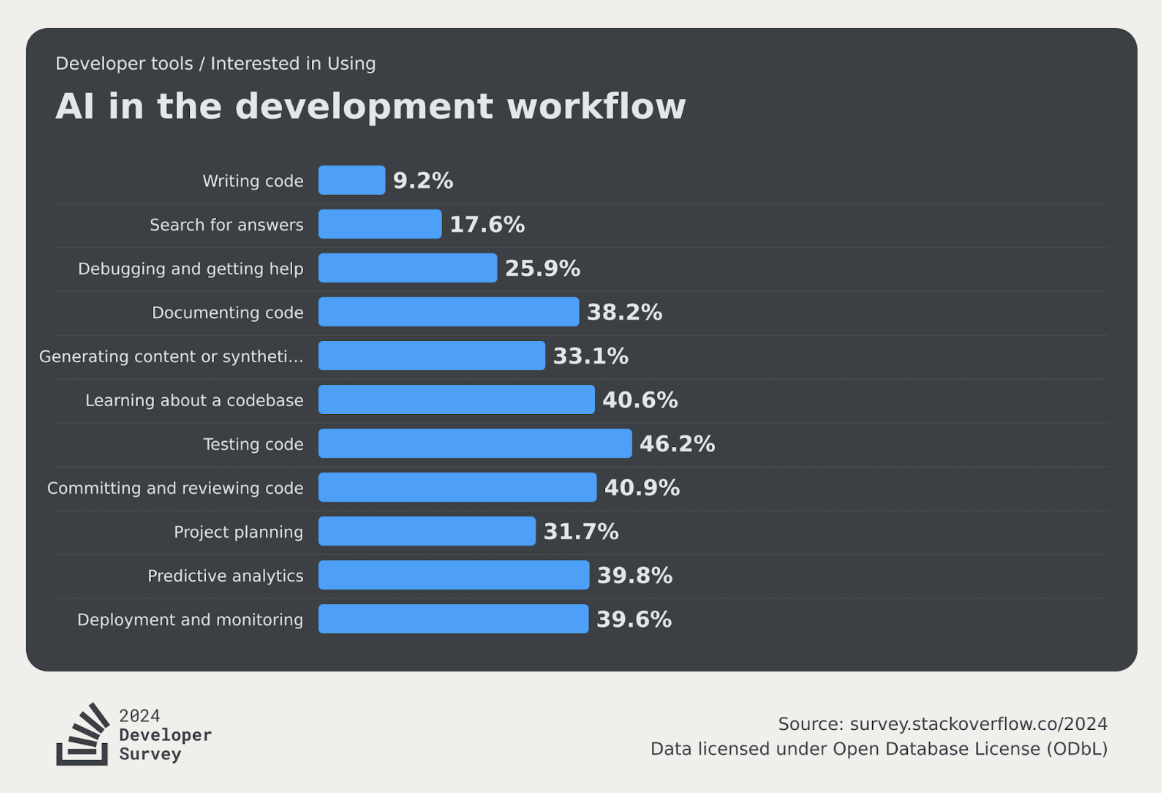

AI tools enforce coding standards uniformly, so reviews are less prone to fatigue and missed catches. Around 40.9% of developers say they are interested in using AI for code review, and this kind of predictable consistency is a likely part of the appeal.

This chart is based on data from the 2024 Stack Overflow Developer Survey. It shows how developers use AI in their coding work. The original dataset is shared under the Open Database License (ODbL) v1.0, which lets people reuse it freely, as long as credit is given. We made some small changes to the chart to make it easier to read.

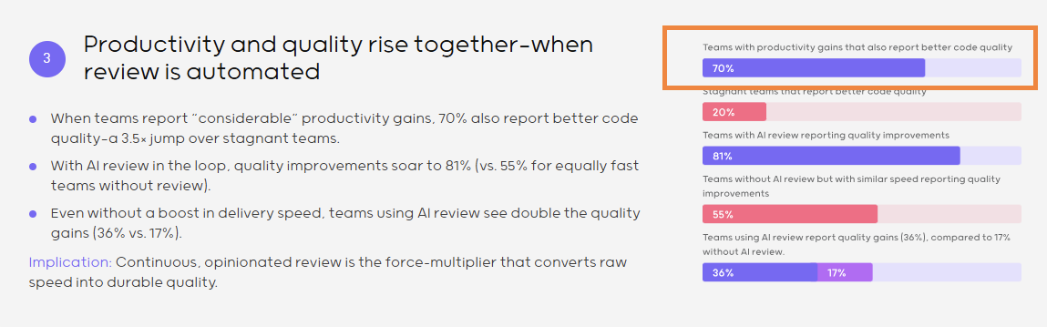

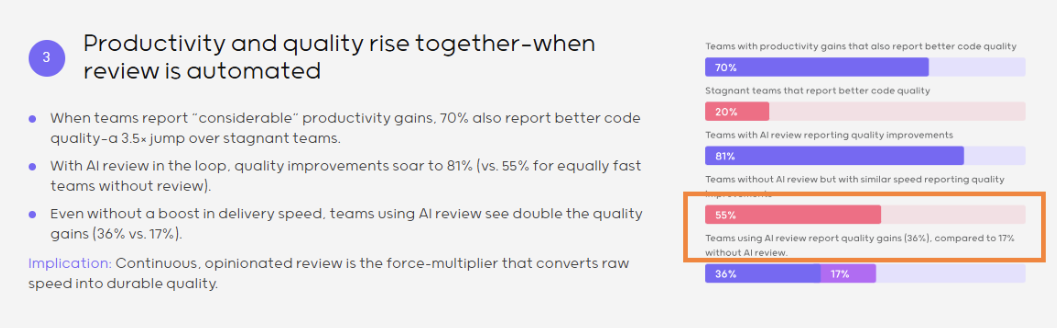

Teams reporting productivity gains with AI also saw quality improve in 70% of cases, which is an incredible multiplier effect.

Source: State of AI code quality in 2025 - Qodo

- Bigger quality gains

One report found that teams using AI code review achieved a quality improvement rate of 81%, compared to just 55% for equally fast teams without automated reviews.

Source: State of AI code quality in 2025 - Qodo

- Scalability for large codebases

These tools can scan hundreds of lines in seconds, a pace human reviewers can’t reliably keep up with. This also helps when reviewing long pull requests, where fatigue or context switching can lead to mistakes.

Overview of Popular AI Code Reviewer Tools

There are plenty of tools out there claiming to help with code reviews, but not all are built the same. Some focus on catching bugs, others help you write better tests, and a few go deeper with full project understanding.

Here's a quick look at some of the top options developers are trying right now:

Pro Tip: Some teams go beyond code review and use AI agents for broader automation, like automating web tasks or even job applications. If you’re already comfortable using AI in other areas, these tools should feel like a natural next step.

How to Integrate AI Code Review into Your Workflow

Adding AI code reviews to your process doesn’t mean you have to rebuild everything from scratch. You just need to slot it into your current workflow in a way that helps, not slows it down. Here’s how to do it step by step.

1. Start with your version control

Most AI code review tools work best when integrated directly into your Git platform. If you're using GitHub, GitLab, or Bitbucket, use tools like CodeRabbit, SonarQube, or CodeScene to hook right into your pull request process.

They’ll leave comments automatically, so that your team gets feedback right where they already work.

Pro tip: Set the AI to suggest changes, not apply them automatically. This gives your team reviewers the final say.
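To make the "suggest, don't apply" idea concrete, here is a minimal sketch of how a review bot might format a comment as a GitHub-style suggestion block, which a human reviewer can then accept or ignore. The `format_suggestion` helper and the sample text are hypothetical, and posting the comment to a pull request is left out.

```python
# Build the fence marker programmatically so this example contains no
# nested literal code fences.
FENCE = "`" * 3


def format_suggestion(explanation, replacement_code):
    """Return a comment body with a GitHub-style suggestion block.

    Hypothetical helper: the reviewer decides whether to apply the
    suggestion; nothing here changes the code by itself.
    """
    return f"{explanation}\n\n{FENCE}suggestion\n{replacement_code}\n{FENCE}"


body = format_suggestion(
    "Use a context manager so the file is always closed.",
    "with open(path) as f:\n    data = f.read()",
)
print(body)
```

Because the output is only a comment, the final decision stays with whoever reviews the pull request.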

2. Use IDE integrations for on-the-fly feedback

If you want suggestions while you code, you can use tools with IDE extensions, like GitHub Copilot or Qodana. They highlight issues in real time so that you can fix problems early, not just during review.

Cursor is another strong option if you want AI in your IDE that feels more like a chat assistant. This kind of early detection makes the actual code review stage faster and smoother.

3. Automate repetitive checks

Use AI to take care of things like formatting, style rules, or obvious logic errors. That way, you can focus on more important tasks like architecture, naming, and edge cases.

Pairing AI tools with other automations can really streamline things. For example, developers who use Sigma Browser often combine AI-powered reviews with browser-based agents to automate time-consuming tasks like generating code documentation or fetching related tickets from a project board.

This frees you up to focus on actual code quality.
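For a sense of what these repetitive checks look like, here is a small rule-based sketch (plain Python, not an AI model) that scans the added lines of a unified diff. The line-length limit, the specific rules, and the sample diff are assumptions chosen for illustration.

```python
import re

MAX_LINE_LENGTH = 100  # assumed team limit


def check_added_lines(diff_text):
    """Scan added lines of a unified diff.

    Returns (line_number_in_diff, message) tuples.
    """
    issues = []
    for i, line in enumerate(diff_text.splitlines(), start=1):
        # Added lines start with "+"; "+++" marks the file header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        content = line[1:]
        if content != content.rstrip():
            issues.append((i, "trailing whitespace"))
        if len(content) > MAX_LINE_LENGTH:
            issues.append((i, "line too long"))
        if re.search(r"\bprint\(", content):
            issues.append((i, "leftover print call"))
    return issues


sample_diff = "\n".join([
    "--- a/app.py",
    "+++ b/app.py",
    "@@ -1,2 +1,3 @@",
    " def total(items):",
    "+    print(items)  ",
    "+    return sum(items)",
])
issues = check_added_lines(sample_diff)
print(issues)
```

Checks like these are exactly the kind of work worth handing off, whether to a linter or to an AI reviewer.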

4. Give your team time to adjust

Every team is different. Some developers will love AI right away, others might feel like it’s getting in the way. The key is to start small, maybe try it on just a few pull requests per week, and build from there.

Run internal experiments. Track how long reviews take with and without AI, and get feedback.
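One simple way to run that comparison is to log how long each pull request took to get approved and compare medians. The sketch below uses made-up sample data and hypothetical field names (`ai_assisted`, `hours_to_approve`); in practice you would export these from your Git platform.

```python
from statistics import median


def median_review_hours(reviews, ai_assisted):
    """Median hours from PR opened to approved for one group of reviews."""
    hours = [
        r["hours_to_approve"]
        for r in reviews
        if r["ai_assisted"] == ai_assisted
    ]
    return median(hours) if hours else None


# Hypothetical sample data for illustration only.
reviews = [
    {"pr": 101, "ai_assisted": True, "hours_to_approve": 3.0},
    {"pr": 102, "ai_assisted": False, "hours_to_approve": 8.0},
    {"pr": 103, "ai_assisted": True, "hours_to_approve": 5.0},
    {"pr": 104, "ai_assisted": False, "hours_to_approve": 6.0},
    {"pr": 105, "ai_assisted": True, "hours_to_approve": 4.0},
]

with_ai = median_review_hours(reviews, ai_assisted=True)
without_ai = median_review_hours(reviews, ai_assisted=False)
print(f"Median with AI: {with_ai} h, without AI: {without_ai} h")
```

A median is less sensitive than an average to the occasional pull request that sits untouched for a week, which makes it a reasonable starting metric for a small trial.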

Common Mistakes to Avoid When Using AI in Code Reviews

AI tools can be incredibly helpful, but only if you use them the right way. Here are some common missteps people make when adding AI into their code review process and how you can avoid them.

1. Relying too much on AI

Just because the AI points something out doesn’t mean it’s right, and just because it stays quiet doesn’t mean the code is clean. AI isn’t perfect. It can miss context, misunderstand complex logic, or suggest changes that go against your team’s best practices.

What to do instead: Treat AI like a second pair of eyes. Helpful, fast, and consistent, but it shouldn’t be your final decision maker.

“Think of AI like a junior developer who’s never tired but still learning.”

— Ravi P., senior backend engineer

2. Ignoring false positives

Some AI tools flag things that aren’t actually problems. Over time, your team might start tuning out these alerts, and that can be dangerous. If developers stop paying attention, they could miss real issues.

What to do instead: Adjust the AI settings. Most tools let you tweak what gets flagged, or even train the AI on your specific codebase to reduce noise.
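Before you change any settings, it helps to know which rules are actually noisy. Here is a rough sketch that counts how often your team dismisses each kind of finding and lists the rules worth tuning. The rule names, the `dismissed` field, and the thresholds are all assumptions for illustration.

```python
from collections import Counter


def noisy_rules(findings, threshold=0.5, min_samples=3):
    """Return rule IDs whose dismissed share is at or above the threshold.

    Rules with fewer than min_samples findings are skipped, since a
    handful of data points says little.
    """
    total = Counter()
    dismissed = Counter()
    for f in findings:
        total[f["rule"]] += 1
        if f["dismissed"]:
            dismissed[f["rule"]] += 1
    return sorted(
        rule
        for rule, n in total.items()
        if n >= min_samples and dismissed[rule] / n >= threshold
    )


# Hypothetical review history.
findings = [
    {"rule": "unused-import", "dismissed": False},
    {"rule": "unused-import", "dismissed": False},
    {"rule": "unused-import", "dismissed": True},
    {"rule": "magic-number", "dismissed": True},
    {"rule": "magic-number", "dismissed": True},
    {"rule": "magic-number", "dismissed": True},
    {"rule": "magic-number", "dismissed": False},
    {"rule": "sql-injection", "dismissed": False},
]
candidates = noisy_rules(findings)
print(candidates)
```

A list like this is a starting point for a conversation, not an automatic mute list. Security-related rules in particular deserve a closer look before anyone turns them down.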

3. Not giving feedback on AI suggestions

This one’s easy to overlook. Some AI tools learn from how you interact with their suggestions. If your team keeps rejecting a certain kind of feedback and says why, the tool may eventually stop offering it.

What to do: Hit the thumbs up or down button if the tool has one. Or better yet, leave a quick comment. It helps the AI improve over time.

4. Forgetting to update AI tools

AI tools evolve quickly. New models are constantly being trained to understand more complex code, follow best practices, and reduce false positives. But if you're still using an old version, you're missing out.

What to do: Make regular updates part of your dev process. At least once a month, check for new releases or improvements.

5. Using AI as a shortcut instead of a support tool

Some teams start using AI to cut corners. Instead of writing clear code or thoughtful commit messages, they just let the AI clean it all up after the fact. That defeats the purpose.

Key takeaway: AI should make your team sharper, not lazier. Use it to catch more, fix faster, and improve learning, not to skip the work.

Real-World Examples of Teams Using AI for Code Review

Let’s talk about how teams are using AI in the real world. This isn’t just theory; it’s already happening across companies of all sizes, from startups to big tech giants.

- Atlassian: boosting review speeds

Atlassian’s Bitbucket Cloud now lets reviewers suggest code changes right inside a pull request. That means they can propose quick edits without jumping back into their IDE, so things move faster, especially when a team is spread across different time zones.

- Shopify: AI-driven code suggestions

Shopify built an internal AI assistant that scans code during pull requests and offers inline suggestions. You can accept or reject the suggestions directly from the PR view.

- Sourcegraph Cody: helping devs navigate large codebases

Teams using Sourcegraph’s Cody get context-aware help across massive monorepos. It suggests fixes, offers explanations, and can even rewrite entire functions, all with an understanding of the repo’s structure.

This kind of AI reviews your code and helps you understand and improve it in real time.

- Google: Internal tools for code quality

Google has been using AI-assisted review tools internally for years. Their systems check for security issues, performance bottlenecks, and style consistency, and they automatically flag anything that falls outside accepted standards.

When AI Code Review Doesn’t Work: Limitations and What to Watch Out For

AI tools are smart, but they’re not perfect. And when you’re dealing with code, even small mistakes can cost time, money, or worse, security risks.

Here are a few things AI still struggles with:

- Context and intent: AI can suggest fixes that technically work but don’t align with your project’s goals or business logic. It can’t always read between the lines like a person would.

- Overcorrections: Sometimes the AI might flag clean code as an error or suggest changes that actually make things worse. This can be frustrating for devs who already followed best practices.

- Lack of accountability: If something goes wrong after merging AI-reviewed code, who takes responsibility? That’s a question many teams still wrestle with.

- Bias and training data limits: Most AI tools are trained on open-source data. So if your codebase is unique or built in a less popular language, the AI might not offer much help.

Did you know?

A study by NYU researchers found that roughly 40% of the programs GitHub Copilot generated in security-relevant scenarios contained vulnerabilities, which is why its suggestions need human review. SQL injection and command injection were among the weaknesses found in Copilot-generated code.
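If you haven't seen a SQL injection up close, here is a small illustration of the pattern a human reviewer should watch for in generated code. This example is not taken from the study; the table, function names, and payload are made up, and it uses Python's built-in `sqlite3` module with an in-memory database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "admin"), ("bob", "dev")],
)


def find_user_unsafe(name):
    # Vulnerable: user input is pasted straight into the SQL text.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Parameterized: the value is passed separately from the SQL text.
    return conn.execute(
        "SELECT name FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "nobody' OR '1'='1"
unsafe_rows = find_user_unsafe(payload)  # matches every row in the table
safe_rows = find_user_safe(payload)      # matches nothing
print(len(unsafe_rows), len(safe_rows))
```

Both functions look reasonable at a glance, which is exactly why this class of bug slips through when suggestions are accepted without a second look.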

How to Successfully Integrate AI into Your Code Review Workflow

Adding AI to your code review process isn’t just about picking a tool and turning it on. It works best when you build it into your workflow with a bit of planning.

Here’s how to do that:

Bonus Tip:

If your team works mostly inside a browser, Sigma Browser can be a handy place to test or run AI review tools without switching between apps. It’s designed for flexible, AI-driven workflows right from your browser.

Final Thoughts

AI code reviewers are no longer just a nice-to-have. They’re becoming a real part of modern development workflows, saving time, catching mistakes, and helping teams ship better code.

But like any tool, they work best when combined with human judgment. Whether you’re a solo dev, part of a startup, or working in a big engineering team, it’s worth trying these tools out.

You don’t have to replace your entire review process overnight. Just start small. See how AI fits into your flow, tweak things as you go, and build from there.

The right balance of AI and human review can take your code quality up a notch without slowing you down.

FAQs

- Are AI code reviewers accurate enough to trust?

They’re pretty solid when it comes to syntax, formatting, and common bugs. But they can still miss complex issues or misunderstand context. That’s why they work best as a second pair of eyes, not the only one.

- Can AI fully replace human code reviewers?

Not really. AI can do a lot, but it still lacks real-world context and creative problem-solving. It’s best used to speed up reviews and handle routine checks, while humans focus on deeper decisions.

- What’s the learning curve like for using AI code review tools?

Most tools are easy to set up and use. Some plug right into GitHub, your IDE, or platforms like Sigma Browser, so you don’t have to change your workflow much.

- Is using AI code review safe for private or company code?

That depends on the tool. Always check their data policy. Some process code locally or within your private network, while others send data to the cloud.

- What’s a good first step if I want to try one?

Pick a tool that fits your current setup. Try it on a small project or branch. See how it handles the code, and invite feedback from your team to refine how it’s used.