In recent years, the term local AI has started to appear more frequently in technical discussions and product descriptions. At a basic level, local AI refers to models that run directly on a user’s device or within a controlled local environment, rather than relying on remote cloud infrastructure. This shift did not happen suddenly. It emerged as a response to growing concerns around data privacy, constant dependence on internet connectivity, and the rising cost of cloud-based AI systems. Tools like Sigma Eclipse reflect this change by bringing AI execution back onto the user’s machine instead of outsourcing it to external servers.

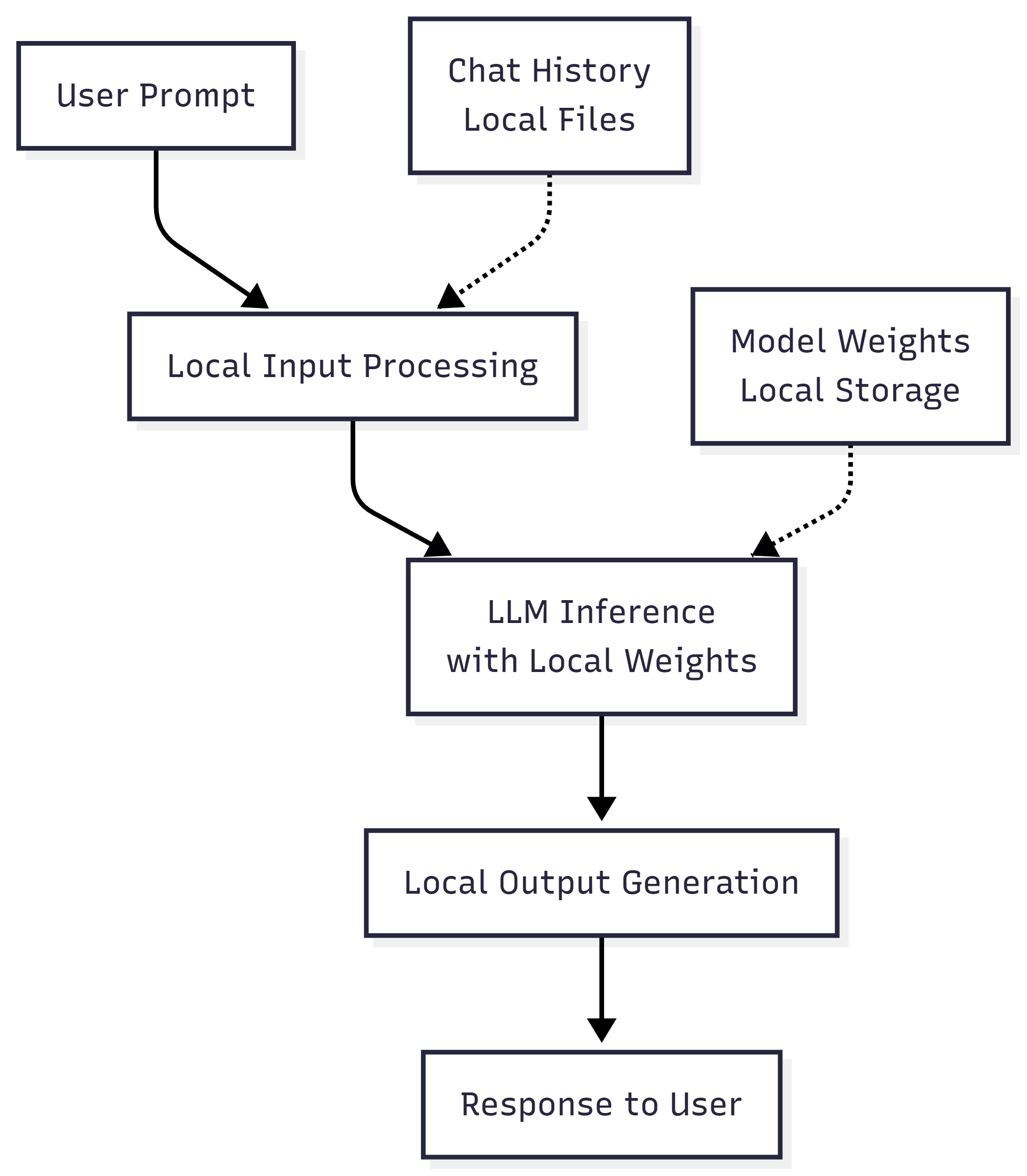

From a functional perspective, local LLMs are capable of many of the same tasks as cloud-based models. They can generate text, answer questions, analyze documents, and assist with writing. The difference lies not in what the model can do, but in where and how it does it. With local AI, prompts, contextual information, and generated outputs are processed on the device itself, rather than being transmitted to an external service.

Local LLMs and User Data

Local LLMs are typically distributed as model files that are downloaded and loaded into a runtime environment on the user’s system. Once installed, the model can operate without a continuous network connection. This architectural difference changes how data flows through the system and how trust is established compared to cloud-based AI.

Because computation happens locally, performance depends entirely on the user’s hardware and configuration. More powerful machines can run larger or faster models, while less capable devices may rely on smaller or optimized versions. Regardless of model size, the core principle remains the same: prompts and outputs are processed on the device, with no dependence on an external service.

Another important aspect is that local AI does not rely on remote logging or request-based monitoring to function. Since there is no need to send prompts to a server, users do not have to consider how their inputs might be stored, reviewed, or reused elsewhere. Control over data lifecycle and retention stays within the local system.

Why Local LLMs Became Feasible Only Recently

The idea of running language models locally is not new, but until recently it was largely impractical. Earlier generations of large models required substantial computational resources that were only available in data centers. Memory constraints, hardware costs, and software complexity made local execution inaccessible for most users.

Several developments changed this situation. Open-source language models became widely available, allowing experimentation outside closed cloud platforms. At the same time, optimization techniques such as quantization reduced the hardware requirements for inference. Consumer hardware also improved significantly, making it possible to run complex models on personal devices.
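To make the idea of quantization concrete, here is a minimal Python sketch of symmetric 8-bit quantization applied to a short list of weights. It is a toy illustration under simplifying assumptions: one shared scale for the whole list, plain Python floats, and hypothetical function names. Real runtimes typically use more elaborate block-wise schemes.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


# Illustrative values only, not taken from any real model.
weights = [0.12, -0.5, 0.33, 0.9, -0.07]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Each weight now fits in 1 byte instead of 4 (float32), at the cost of a
# rounding error of at most half the scale per weight.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized)
print(f"largest rounding error: {max_error:.5f}")
```

The trade-off is the one described above: less memory per weight in exchange for a small, bounded loss of precision.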

As a result, local LLMs transitioned from experimental setups to practical tools. What once required specialized infrastructure can now run on laptops and workstations, enabling new ways to use AI without relying on constant internet access or external processing.

Hardware Requirements and Optimization for 2025

Running local large language models in 2025 requires an understanding of your hardware, because all computation happens on your own machine. Unlike with cloud AI, performance, speed, and model size are limited by what your computer can handle.

Below are the four main hardware components that matter when running local LLMs, explained in practical terms.

Graphics Processing Unit (GPU)

The GPU is the most important component for running local AI models. It performs the mathematical operations required for text generation. CPUs can run small models, but GPUs are significantly faster and are, in practice, needed to run larger models at usable speeds.

VRAM refers to video memory on the GPU. It determines how large a model your system can load.

B stands for billions of parameters. More parameters generally mean a more capable model, but they also require more VRAM.
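As a rough illustration of how parameter count translates into memory, the following Python sketch multiplies the number of parameters by the bytes stored per weight. The function name and the 1.2 overhead factor (a loose allowance for context cache and runtime buffers) are assumptions made for this example, not measured values, and GB here means 10^9 bytes.

```python
def estimate_model_memory_gb(params_billions, bits_per_weight=16, overhead=1.2):
    """Rough memory estimate: parameters x bytes per weight x overhead."""
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


# Compare full 16-bit weights with 8-bit and 4-bit quantized versions.
for bits in (16, 8, 4):
    estimate = estimate_model_memory_gb(7, bits)
    print(f"7 B model at {bits}-bit: roughly {estimate:.1f} GB")
```

Actual usage varies with the runtime, context length, and quantization format, so treat the result as a starting point rather than a guarantee that a model will fit.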

Typical requirements

- Entry level: RTX 4060 Ti (16 GB VRAM) – suitable for 7–13 B parameter models

- Recommended: RTX 4090 (24 GB VRAM) – handles models of around 30 B parameters, typically with quantization

- High performance: H100 (80 GB VRAM) – for enterprise deployment with the largest models

- Alternative: Multiple RTX 3090s (24 GB each) for cost-effective scaling

Why this matters

If your GPU does not have enough VRAM, the model will either fail to load or, depending on the runtime, fall back to much slower CPU execution. This is the most common limitation users encounter.

How to check your GPU and VRAM
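One way to check this from a script is to query `nvidia-smi`, the command-line tool that ships with NVIDIA drivers. The sketch below assumes an NVIDIA GPU; the helper names are hypothetical, and AMD or Apple Silicon systems need different tools.

```python
import shutil
import subprocess


def parse_gpu_csv(text):
    """Parse 'name, memory_mib' lines into (name, vram_gb) tuples."""
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        try:
            name, memory_mib = (part.strip() for part in line.rsplit(",", 1))
            gpus.append((name, round(int(memory_mib) / 1024, 1)))
        except ValueError:
            continue  # skip lines that do not match the expected format
    return gpus


def list_nvidia_gpus():
    """Return detected NVIDIA GPUs, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    return parse_gpu_csv(result.stdout) if result.returncode == 0 else []


print(list_nvidia_gpus() or "No NVIDIA GPU detected via nvidia-smi")
```

With the `nounits` option, `nvidia-smi` reports memory in MiB, which the parser converts to a rounded figure in GB-scale units.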

System Memory (RAM)

RAM is your computer’s general working memory. While VRAM stores the model itself, system RAM supports data processing, caching, and multitasking during inference.

If you run out of RAM, the system may slow down dramatically or fail to run the model properly.

Typical requirements

- 16 GB minimum for basic 7 B models

- 32 GB recommended for 13–30 B parameter models

- 64 GB for 70 B+ parameter models

- 128 GB+ for production deployments with multiple concurrent users

Why this matters

Even if your GPU is strong, insufficient RAM can bottleneck performance or cause crashes.

How to check your RAM
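A minimal sketch using only the Python standard library is shown below. It relies on `os.sysconf`, which is available on Linux and most Unix-like systems but not on Windows, so the hypothetical helper returns `None` when the value cannot be read.

```python
import os


def total_ram_gb():
    """Return installed RAM in GiB, or None where os.sysconf is unavailable."""
    try:
        page_size = os.sysconf("SC_PAGE_SIZE")
        page_count = os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None  # e.g. Windows, which has no os.sysconf
    return round(page_size * page_count / 1024**3, 1)


ram = total_ram_gb()
if ram is None:
    print("Could not determine RAM on this platform")
else:
    print(f"Total RAM: {ram} GiB")
```

On Windows, the same figure is shown in Task Manager under the Performance tab.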

Processor Requirements

The CPU coordinates tasks, manages data flow, and supports parts of inference that are not handled by the GPU. While the CPU is usually not the primary bottleneck, modern instruction support and multiple cores improve overall stability and responsiveness.

Typical requirements

- AVX2 instruction support (standard on modern CPUs)

- Multi-core processors for efficient parallel processing

- AMD Ryzen 9 7950X3D or Intel Core i9-13900K recommended

- Server-grade AMD EPYC or Intel Xeon for enterprise use

Why this matters

A weak CPU can slow down loading, preprocessing, and multitasking, even with a strong GPU.

How to check your CPU
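The following Python sketch prints the processor name and core count, and checks for AVX2 on Linux by reading `/proc/cpuinfo`. The `has_avx2` helper is a hypothetical name for this example; on other platforms the AVX2 check is skipped rather than guessed.

```python
import os
import platform


def has_avx2(cpuinfo_text):
    """Return True if a 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, values = line.partition(":")
            return "avx2" in values.split()
    return False


print("Processor:", platform.processor() or platform.machine())
print("Logical cores:", os.cpu_count())

try:
    with open("/proc/cpuinfo") as f:
        print("AVX2 supported:", has_avx2(f.read()))
except OSError:
    print("AVX2 check skipped: /proc/cpuinfo is not available on this platform")
```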

Storage Configuration

Local models are large files. Storage speed directly affects how quickly models load and how responsive the system feels.

NVMe SSDs are significantly faster than traditional HDDs or SATA SSDs and are strongly recommended.

Typical requirements

- NVMe SSD strongly recommended for fast model loading

- Minimum 1 TB capacity for model storage

- Separate OS and model drives recommended

- High-speed networking for distributed deployments

Why this matters

Slow storage increases startup times and can interrupt workflows when loading or switching models.

How to check your storage
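Capacity is the easiest part to verify from a script. The sketch below uses `shutil.disk_usage` from the Python standard library to report free space and to test whether a model of a given size would fit. The helper names and the 10 GB reserve are assumptions for illustration; the script does not detect whether the drive is NVMe, SATA, or an HDD.

```python
import shutil


def free_space_gb(path="."):
    """Free space on the drive containing `path`, in GB (10^9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def has_room_for(model_size_gb, path=".", reserve_gb=10):
    """True if the model fits while leaving `reserve_gb` of headroom."""
    return free_space_gb(path) >= model_size_gb + reserve_gb


usage = shutil.disk_usage(".")
print(f"Total: {usage.total / 1e9:.0f} GB, free: {usage.free / 1e9:.0f} GB")
print("Room for a 40 GB model file:", has_room_for(40))
```

Pass the folder where models are stored as `path` if it sits on a different drive from the current directory.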

Popular Open-Source LLM Families for Local Use

While many local LLMs require manual selection and tuning based on hardware constraints, some systems abstract this complexity away from the user. In Sigma Eclipse, the local model is automatically configured to match the technical capabilities of the user’s device. This allows the system to balance performance and responsiveness without requiring users to choose a specific model or adjust parameters themselves. As a result, Sigma Eclipse delivers consistent local AI behavior across different machines while avoiding unnecessary slowdowns or resource overload.
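How Sigma Eclipse performs this matching is not described here, so the following Python sketch is only a hypothetical illustration of the general idea: pick the largest model tier whose memory needs are met by the device. The tier labels and the `pick_model_tier` function are invented for this example, and the thresholds simply reuse the VRAM and RAM figures listed in the hardware section above.

```python
# (label, minimum VRAM in GB, minimum RAM in GB), largest tier first.
# Thresholds reuse this article's hardware figures; they are not vendor data.
TIERS = [
    ("large (70 B+)", 80, 64),
    ("medium (around 30 B)", 24, 32),
    ("small (7 B)", 16, 16),
]


def pick_model_tier(vram_gb, ram_gb):
    """Return the largest tier whose VRAM and RAM minimums are both met."""
    for label, min_vram, min_ram in TIERS:
        if vram_gb >= min_vram and ram_gb >= min_ram:
            return label
    return "below the listed minimums"


print(pick_model_tier(vram_gb=24, ram_gb=32))
print(pick_model_tier(vram_gb=24, ram_gb=16))
print(pick_model_tier(vram_gb=8, ram_gb=16))
```

Checking both limits together reflects the earlier point that a strong GPU can still be held back by insufficient RAM.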

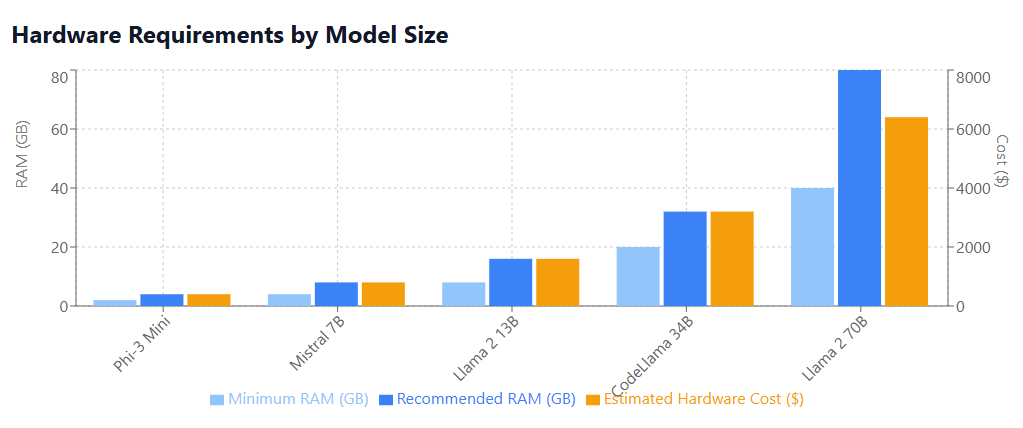

The hardware requirements for some of the LLM families are shown here:

Local AI as an Architectural Choice

Local AI is defined more by its architecture than by its feature set. It changes where computation happens, how data is handled, and who controls the execution environment. These changes affect trust assumptions, operational constraints, and the kinds of tasks users can comfortably hand to a model.

As local large language models become more widely available, they offer an alternative to cloud-based systems for users who value autonomy, privacy, and offline capability. Understanding how local AI works in practice makes it easier to judge when this approach is appropriate.

Rather than treating local models as a separate technical setup, some modern tools apply this architecture directly inside everyday applications. Sigma Eclipse is an example of this approach in practice. When activated in a browser, the model runs on the user’s device and can be used for tasks such as summarizing web pages, drafting text, organizing information, or automating routine browser actions. These tasks are processed locally, without prompts, context, or results being sent to external servers. This makes local AI usable not only for experimentation but also for daily work scenarios where privacy, predictability, or limited connectivity are important considerations.
