AI tools are now a regular part of how we work. People use them to write messages, analyse documents, summarise web pages, and automate routine tasks. Most of the time these tools work well, so users rarely think about how the results are produced or where their data actually goes. Sigma Eclipse is an example of a local AI system that runs entirely on a user's device, keeping prompts, context and outputs private while still providing the same kinds of assistance as cloud-based tools.

Several major incidents have recently shown how risky it can be to rely on cloud-based AI and data services. In one case, security researchers found a Chinese AI startup's database exposed on the public internet with no authentication. User chat histories, API keys, system logs and other sensitive information were accessible to anyone who discovered it. This shows how a single cloud storage misconfiguration can lead to broad data exposure.

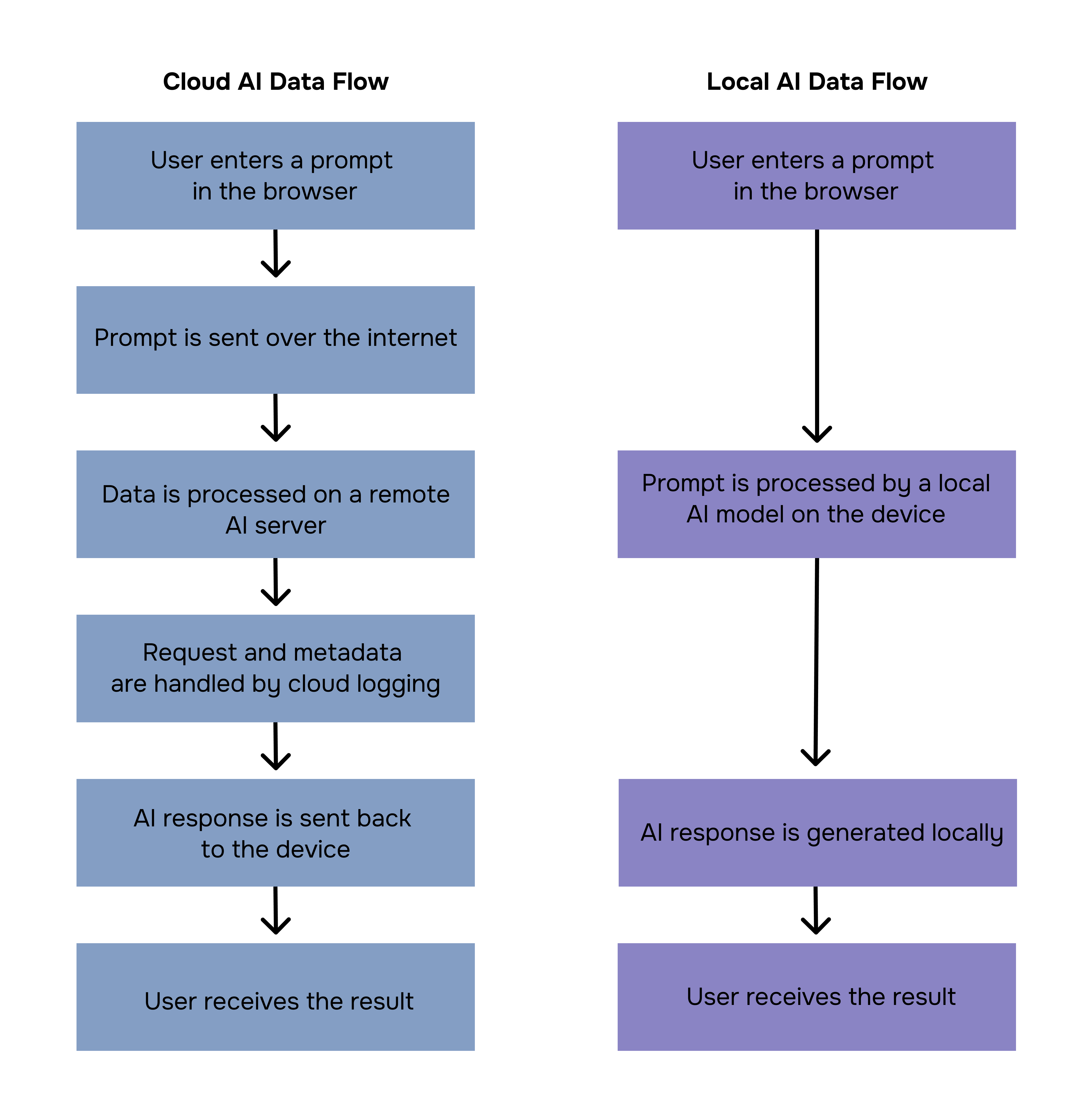

Understanding where AI computation takes place helps clarify what happens to prompts, contextual information, and generated outputs. It also explains why some AI systems need constant internet access and ongoing payments, while others, like Sigma Eclipse, can operate offline and without per-request costs. This article looks at the differences between cloud AI and local AI by focusing on data flow.

In short, a comparison between local AI and cloud AI might look like this:

But let's take a closer look at the differences.

Where Your Prompt Goes

When you use a cloud-based AI system, your prompt is sent from the device to a remote server for processing. This happens over the internet, and the request normally includes the full text you entered. Once the server has processed it, the response is sent back to your application or browser. Once the data has left the device, it may be accessible to the provider's employees, automated monitoring systems, third-party cloud infrastructure providers, legal or regulatory authorities, and, in some cases, integrated partners or external analytics services.

With a local AI model, the prompt always stays on your device. The text is processed directly in memory by a model that is already installed locally, and no external communication is needed to complete the request.

This difference matters when prompts contain personal or sensitive information. For example, drafting a private email, analysing an internal document, or working with account-related text all involve data that some users may not want to transmit outside their device.

What Context Is Shared With the Model

AI systems often rely on more than just the text a user types. Context can include surrounding content, such as a webpage being viewed, a document currently open, or previous interactions within the same session.

In cloud-based AI systems, this contextual information may also be transmitted to external servers so the model can generate more relevant responses. Depending on the implementation, this can include page content, metadata, or interaction history associated with the request.
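To make this concrete, here is a minimal sketch of how a cloud-backed assistant might package a request. The function `build_cloud_request` and the field names (`prompt`, `context`, `page_text`, `page_url`, `history`) are hypothetical and not taken from any real provider's API; the point is only that everything placed in the payload leaves the device once it is sent.

```python
import json

def build_cloud_request(prompt, page_text, page_url, history):
    """Assemble a request body for a remote AI service.

    Hypothetical schema for illustration only, not a real provider's API.
    """
    payload = {
        "prompt": prompt,              # what the user typed
        "context": {
            "page_text": page_text,    # content of the page being viewed
            "page_url": page_url,      # metadata about that page
        },
        "history": history,            # earlier turns in the same session
    }
    # This serialized string is what would travel over the network.
    return json.dumps(payload)

body = build_cloud_request(
    prompt="Summarise this page",
    page_text="Quarterly results: revenue up 4%...",
    page_url="https://intranet.example.com/report",
    history=["Hi", "Hello! How can I help?"],
)
print(len(body), "bytes of prompt and context would leave the device")
```

A local model receives the same information as ordinary in-memory arguments, so no serialized copy travels over the network.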

Storage, Logging, and Retention

Once a prompt has been processed, how that data is handled depends on the architecture of the AI system. In cloud-based AI, requests and responses often pass through logging systems. Providers use these logs for monitoring, debugging, abuse prevention, or service improvement. How long the data is kept depends on the provider and is normally governed by its own policies and legal obligations.

Even if data isn't meant to be stored long-term, it might still be around in server memory or logs as part of the usual system operations. For the user, this means that copies of their input and output can be stored outside their device, even if only for a limited time.
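The sketch below models a short-lived request log of the kind a cloud service might keep. The `LogEntry` structure, the `purge_expired` helper and the 30-day window are assumptions for illustration; real retention periods depend on each provider's policies. Until a purge runs, the stored prompts and responses remain on the server.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class LogEntry:
    """Hypothetical server-side record of one request."""
    timestamp: datetime
    prompt: str
    response: str

# Hypothetical retention window; actual periods vary by provider.
RETENTION = timedelta(days=30)

def purge_expired(log, now):
    """Keep only entries younger than the retention window."""
    return [e for e in log if now - e.timestamp < RETENTION]

now = datetime(2024, 6, 1)
log = [
    LogEntry(datetime(2024, 4, 1), "draft my email", "Dear ..."),
    LogEntry(datetime(2024, 5, 25), "summarise contract", "The contract ..."),
]
log = purge_expired(log, now)
# The older entry is gone, but the recent one is still stored server-side.
print([e.prompt for e in log])  # ['summarise contract']
```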

With local AI, no server-side infrastructure is involved in processing requests. Prompts and contextual data are handled entirely within the device's runtime environment. Once you have finished a task, no outside system keeps a record of the interaction, and the only copy that persists is any output you choose to save. In other words, the data never lingers anywhere outside your device.

Internet Dependency and Control

Cloud-based AI systems require a persistent internet connection to function. Because everything's done remotely, the service depends on network access, server uptime and external system performance. If the connection is interrupted, the AI becomes unavailable.

Once installed, local AI works independently of network connectivity: tasks keep running even when the device is fully offline. This gives users control over when and how the AI is used.

Network dependency also affects how easily data handling can be verified. With cloud AI, users largely have to rely on the provider's statements about what happens to their data. With local AI, you can confirm that processing happens on-device by turning off network access and checking that the system keeps working. This is a practical way to test data-flow assumptions without relying solely on policy statements.

Cost and Data as a Resource

The way an AI system is designed also affects how much it costs to run. Cloud-based AI services usually charge either per request or by the compute time a task consumes. Each query uses server resources, and the cost varies with the provider and the volume of use. For heavy users, these costs can add up quickly and require ongoing usage monitoring.
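As a rough worked example of per-request billing, the sketch below estimates a month of charges. The price of $0.01 per request and the usage levels are made-up assumptions, not any provider's real rates; `Decimal` keeps the currency arithmetic exact.

```python
from decimal import Decimal

def monthly_cloud_cost(requests_per_day, price_per_request, days=30):
    """Estimate a month of usage-based charges (all inputs are hypothetical)."""
    return requests_per_day * days * price_per_request

# Hypothetical price: $0.01 per request.
price = Decimal("0.01")
for per_day in (20, 200, 2000):
    print(per_day, "requests/day ->", monthly_cloud_cost(per_day, price), "USD/month")
# 20 requests/day -> 6.00 USD/month
# 200 requests/day -> 60.00 USD/month
# 2000 requests/day -> 600.00 USD/month
```

The total grows linearly with usage, which is why heavy users need to keep track of it. A local model has no per-request term, so the equivalent figure stays at zero regardless of volume.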

Local AI, on the other hand, runs entirely on the user's device, using its available computing power. There is no extra charge for using the local model. The main constraints are the device's processing power and memory, not external service costs. Users can therefore run tasks without worrying about usage limits or extra charges, which makes local AI predictable both financially and operationally.

Choosing Where Your AI Lives

The data flow in both models can be illustrated with the following diagram:

The main difference between cloud and local AI is where computation occurs and, as a result, where data flows. Local AI, like Sigma Eclipse, handles all processing on the user's device, giving them total control over their data. You can enable Eclipse mode in Sigma Browser to make sure that AI tasks are done locally.

With Sigma Eclipse, you can get the best of both worlds, combining autonomy, privacy and convenience. While cloud AI is still better suited to very large computations or services that depend heavily on external integrations, Sigma Eclipse shows that local AI can handle everyday tasks efficiently while keeping all data on the device. Understanding the differences between the two helps users choose the architecture that best fits their workload and privacy needs.
